Introduction

If technology fails you once again, it is important to know how to get from place to place using an "old school" map. To do this, though, we first must make the map. We will go into greater detail about making these maps later in this blog post, but the major factor that makes the map usable is the grid we add to it, which has a set number of feet (or degrees) between grid lines. The map will be used to locate points at the Priory in Eau Claire, Wisconsin, and we will use a pace count to navigate to those points.

Pace Count: A pace count is a way of measuring distance by counting steps. You start with either your right or left leg and count every time the opposite foot hits the ground. So if you start with your right leg, you count every time your left foot hits the ground.

Methods

To navigate with a map, we must know roughly how many paces it takes to cover 100 yards. Walking 100 yards and counting each pace, I had a count of 62 paces per 100 yards. This information will be useful in the next lab, when we go out into the field.
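Because one pace is counted every two steps, the 62-pace calibration converts directly between paces and distance. A minimal sketch, assuming that calibration holds for the terrain being walked (pace length can change on slopes or in brush); the function names are hypothetical:

```python
PACES_PER_100_YARDS = 62  # my calibration: 62 paces over a measured 100 yards


def paces_to_yards(paces: float, paces_per_100: float = PACES_PER_100_YARDS) -> float:
    """Convert a pace count into an approximate distance in yards."""
    return paces / paces_per_100 * 100


def yards_to_paces(yards: float, paces_per_100: float = PACES_PER_100_YARDS) -> int:
    """Number of paces (rounded) needed to cover a distance in yards."""
    return round(yards / 100 * paces_per_100)


# One 50-foot grid square is 50 / 3 yards, or about 10 paces
print(yards_to_paces(50 / 3))
print(paces_to_yards(31))  # half of the calibration count: 50.0 yards
```

In the field the second function is the useful one: look up the distance on the map, convert it to paces, and count.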

The second part of this lab was to create two base maps, the first using feet as the unit of measurement and the second using degrees. To create the maps we used data from the Priory Geodatabase created by Dr. Joseph Hupy of the University of Wisconsin-Eau Claire Geography and Anthropology Department. For my maps I used aerial imagery from ESRI ArcMap and the Priory Boundary to indicate the study area. A 50-foot by 50-foot grid was overlaid on the map as well.

Both maps also contained the following essential elements:

-North Arrow

-A Scale Bar in meters

-Relative Fraction Scale

-Projection and Coordinate System

-Grid, properly labeled in correct units

-Background layer (aerial imagery)

-Data Sources Used

-And lastly, a watermark (creator's name)

Results

Map 1 uses the NAD 1983 UTM Zone 15 projection.

Map 2 uses the GCS_WGS_1984 geographic coordinate system.

Map 1 has grid lines every 50 feet.

Map 2 has a similar grid, but it is labeled in degrees instead of feet.
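A degree of longitude shrinks as you move away from the equator, so a 50-foot spacing is not the same number of degrees east-west as north-south. A rough conversion, assuming a spherical Earth (mean radius 6,371 km) and a latitude of about 44.8°N for Eau Claire; this approximates, but is not, the WGS 1984 ellipsoid calculation ArcMap would use:

```python
import math

EARTH_RADIUS_M = 6_371_000  # mean spherical radius (approximation)
FEET_TO_METERS = 0.3048


def feet_to_degrees(feet: float, latitude_deg: float) -> tuple[float, float]:
    """Return (degrees of latitude, degrees of longitude) spanned by a distance in feet."""
    meters = feet * FEET_TO_METERS
    meters_per_degree_lat = math.pi * EARTH_RADIUS_M / 180
    # East-west degrees get shorter by cos(latitude)
    meters_per_degree_lon = meters_per_degree_lat * math.cos(math.radians(latitude_deg))
    return meters / meters_per_degree_lat, meters / meters_per_degree_lon


dlat, dlon = feet_to_degrees(50, 44.8)
print(f"{dlat:.6f} deg latitude, {dlon:.6f} deg longitude")  # roughly 0.000137 and 0.000193
```

This is why a degree-labeled grid at this latitude looks stretched compared with a foot-labeled one.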

Sunday, October 25, 2015

Saturday, October 17, 2015

Lab 4: Unmanned Aerial Systems Mission Planning

Introduction

The objective of this assignment is to introduce students to Unmanned Aerial Systems (UAS), Unmanned Aerial Vehicles (UAV) and mission planning, and to give a basic understanding of how software such as Mission Planner, Real Flight Simulator and Pix4D works. This assignment is broken up into two separate parts. In the first part, Dr. Hupy manually flies a DJI Phantom over a study area, taking pictures periodically; in the second part, we use the software available at the University of Wisconsin-Eau Claire to process the data. Mission planning and flight simulators are also used during this lab.

Before entering the field, we learned about several different UAS/UAV systems, including fixed-wing and multi-rotor (quad/hex copter) designs, and about the difference between an Unmanned Aerial Vehicle/System and an RC (radio control) toy plane.

Fixed Wing

The very first thing we learned about a fixed-wing UAV (one whose wings do not move) is that it is not an RC plane. The major difference is that an RC plane does not have an onboard computer that can be used to collect data. The fixed-wing UAVs at the University of Wisconsin-Eau Claire use a Pixhawk, which is the brain of the system. Everything the fixed-wing UAV does is relayed back to the ground station, whether it is being remote controlled or the onboard computer is flying a pre-determined flight plan.

Newer UAVs are starting to use replaceable, rechargeable batteries to lengthen flight time, instead of one fixed battery that has to be recharged every so often. The fixed-wing UAV we were shown had an average flight time of about 1.5 hours and a cruising speed of over 14 meters per second (m/s). Lastly, we were shown how these fixed-wing vehicles get into the air: they do not have wheels and are too heavy to throw, so they rely on a bungee-cord launch mechanism, which makes them very difficult to use in small spaces such as cities and dense forests.

Multi-Rotor

In the lab we were shown two different multi-rotor UAVs. One had four rotors (a quadcopter): two of the propellers were silver and two were black, to indicate that they spin in opposite directions. Although it was not mentioned in class, I believe they spin in opposite directions so that their torques cancel out, which keeps the aircraft from spinning in place and gives a smooth, stable flight. Spinning the propellers in opposite directions also makes the aircraft able to move in any direction at the flick of a switch.

The other multi-rotor UAV we saw had six rotors (a hexacopter). Much heavier than the previously shown UAVs, it requires two large batteries to operate. It can carry a much larger payload than the others, but a heavier payload also means higher power usage, so the six-rotor UAV has a flight time of only about 35 minutes, less if it is carrying heavier equipment.

For all three of the UAVs we saw, an increase in speed means an increase in turning radius. The multi-rotors, however, can slow down and hover, eliminating the large turn radius. The fixed-wing UAV cannot hover (if it slows down too much it will stall), so it must use a large turn radius when it is flying fast.
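The link between speed and turn radius can be made concrete with the standard formula for a level, coordinated turn of a winged aircraft, r = v² / (g · tan φ), where φ is the bank angle. A minimal sketch, assuming a 30° bank angle (an illustrative value, not one given in class) and speeds around the 14 m/s cruise speed mentioned above; it shows that doubling the speed quadruples the radius:

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def turn_radius(speed_ms: float, bank_deg: float = 30.0) -> float:
    """Radius (m) of a level, coordinated turn at a given airspeed and bank angle."""
    return speed_ms ** 2 / (G * math.tan(math.radians(bank_deg)))


for speed in (7.0, 14.0, 28.0):
    print(f"{speed:4.1f} m/s -> {turn_radius(speed):6.1f} m")
```

The formula describes winged flight; a multi-rotor sidesteps it by slowing down or stopping to hover before changing direction.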

Part 1: Demonstration Flight

Under the University of Wisconsin-Eau Claire Walking Bridge, Dr. Hupy flew his DJI Phantom unmanned aerial vehicle (UAV). The purpose of this demonstration flight was to show how a UAV is flown, as well as the tools and applications that could be used in this kind of situation. On board the DJI Phantom was a camera that takes pictures of what is directly below it. A switch on the controller triggered each picture. For larger study areas, or with other UAV models, the camera can be set to take pictures at a fixed interval (usually one every 0.7 seconds).
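With a fixed shutter interval, the distance flown between photos is simply speed × interval, and that distance decides how much consecutive photos overlap. A minimal sketch, assuming the 14 m/s cruise speed quoted for the fixed-wing UAV and a hypothetical 60 m along-track photo footprint (the real footprint depends on the camera and altitude):

```python
def photo_spacing(speed_ms: float, interval_s: float) -> float:
    """Ground distance (m) flown between consecutive exposures."""
    return speed_ms * interval_s


def forward_overlap(speed_ms: float, interval_s: float, footprint_m: float) -> float:
    """Fraction of each photo's along-track footprint shared with the next photo."""
    return max(0.0, 1 - photo_spacing(speed_ms, interval_s) / footprint_m)


# Hypothetical: 14 m/s, one photo every 0.7 s, 60 m along-track footprint
print(f"{photo_spacing(14, 0.7):.1f} m between photos")
print(f"{forward_overlap(14, 0.7, 60):.0%} forward overlap")
```

Flying faster or shooting less often shrinks the overlap, which is one reason the interval is kept short.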

The DJI took over 200 images of two separate study areas. One study area was east of the walking bridge, capturing several parts of the shore, the water and grassy areas. The other study area was west of the walking bridge, where a couple of students used rocks to make a large "24" with a circle around it.

Later on, these images will be used in the program Pix4D to create a Digital Surface Model (DSM).

DJI Phantom (figure 1)

Pros

-The DJI Phantom comes as a basic quadcopter starting at $500, and can be upgraded with a gimbal and camera while still costing under $2000.

-Relatively easy to fly for someone who has never flown one before.

-Due to its small size, it is very portable

Cons

-Not a whole lot of control

-Installation can be difficult

Fixed Wing Vehicles (figure 2)

Pros

-Long Flight Time

-Larger Payload

-Multiple different instruments can be installed to it

-Stable in windy conditions

Cons

-Long set up/prep time

-Needs a large takeoff area

-Requires large turn radius

Applications

-Precision Agriculture Mapping

-Large Area Mapping

-Ozone Mapping

Multi-Rotor Vehicles

Pros

-Small Turn Radius

-Easy for beginners to fly

-Multiple sensors can be attached to it

Cons

-Small payload capabilities

-Shorter flight time

-Not stable in windy conditions

Applications

-Flying over volcanoes

-Asset Inspection

-Live Streaming events

When I was at Whistling Straits watching the second round, I got an up-close view of one of the multi-rotor UAVs they were using to film some of the holes along Lake Michigan.


-And one day, delivering small boxes from Amazon...

Part 2: Software

After seeing the DJI fly around on the banks of the Chippewa River, we went back to the labs at the University of Wisconsin-Eau Claire to learn about software we can use to process images, create flight plans and even fly simulators. To process the images we collected, we used a program called Pix4D. We used this image processing software with the DJI Phantom images to create a 3D view of the area.

We used the software to create a Digital Elevation Model (DEM), along with other products, from the pictures taken by the DJI Phantom Vision drone. Of the 200+ images taken, I used a total of 19 to create a point cloud file, which can be turned into a DEM raster using outside programs such as ArcMap.

The first step in using this software is to select the pictures you want to use from the folder of pictures taken. Again, I used 19 pictures, each containing a portion of the circled 24 on the bank of the Chippewa River (figure 5).

The next step in this process was to make sure all of the properties for each image were correct. Since the camera stored altitude and coordinate data with each image, this step only required a simple click on the next tab (figure 5).

Figure 5: A list of images ready for post-processing, containing multiple forms of data

Figure 6: A list of the different types of maps you can make, depending on how you process your data

Figure 7: The mosaic and Digital Surface Model created from processing the images collected by the DJI Phantom

Figure 8: One of the maps created, showing how much overlap there was between the images

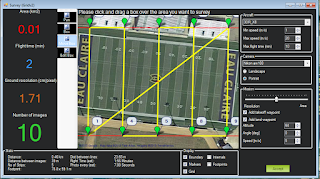

Mission Planner (figures 9-11)

Many newer UAVs have onboard computers that can fly themselves (well, sort of). For them to fly themselves, we use a program called Mission Planner. Mission Planner is software in which you can plan your flights and figure out how long a flight will take, as well as how many pictures will need to be taken.
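Mission Planner works out these numbers for you, but the idea behind the estimate can be sketched: fly parallel back-and-forth lines across the area, spaced so neighbouring photos overlap, and count photos along each line. A minimal sketch, not Mission Planner's actual algorithm; the area size, camera footprint, overlap percentages, speed and the `plan_survey` name are all hypothetical:

```python
import math


def plan_survey(area_width_m, area_length_m, footprint_w_m, footprint_l_m,
                side_overlap=0.7, forward_overlap=0.8, speed_ms=5.0):
    """Rough line count, photo count and flight time for a back-and-forth survey."""
    line_spacing = footprint_w_m * (1 - side_overlap)      # gap between flight lines
    photo_spacing = footprint_l_m * (1 - forward_overlap)  # gap between photos on a line
    n_lines = math.ceil(area_width_m / line_spacing) + 1   # +1 so both edges are covered
    photos_per_line = math.ceil(area_length_m / photo_spacing) + 1
    # Fly every line end to end, plus the short hops between lines
    path_length_m = n_lines * area_length_m + (n_lines - 1) * line_spacing
    return {
        "lines": n_lines,
        "photos": n_lines * photos_per_line,
        "flight_time_min": path_length_m / speed_ms / 60,
    }


plan = plan_survey(200, 300, 60, 45)  # hypothetical 200 m x 300 m study area
print(plan)
```

The design choice is the usual trade-off: higher overlap gives Pix4D more matching points but means more lines, more photos and a longer flight.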

Because of the risk posed by other commercial or private aircraft, mission planning is very important. “Drones” typically have a bad reputation with the public, so planning and getting everything set up properly is even more important. On the Mission Planner planning page, there are multiple red circles,

Figure 9: Opening screen of Mission Planner

Figure 10: Study area for UAV flight

Figure 11: Proposed flight plan for a flight to take place in the study area

In terms of expense, the worst thing that can happen is crashing a UAV and totally destroying it because you are not familiar with how it works. Luckily, we have Real Flight flight simulators in the lab, so you can practice with (and crash) all the UAVs you want without worrying about damaging thousands of dollars' worth of equipment.

Real Flight is one of the most lifelike flight simulators on the market; because it uses a controller similar to that of a real UAV or RC vehicle, it gives a very realistic flying experience. You can choose from just about any type of vehicle imaginable, including hex copters, quadcopters, helicopters, all different types of planes, a gator driving a wind boat, and even a paper airplane.

Part 3: Scenarios

"A power line company spends lots of money on a helicopter company monitoring and fixing problems on their line. One of the biggest costs is the helicopter having to fly up to these things just to see if there is a problem with the tower. Another issue is the cost of just figuring how to get to the things from the closest airport."

First, let us run through what we are trying to accomplish in this scenario: we want a cost-efficient way to monitor and fix power lines.

The biggest issue here is the helicopter. Helicopters are expensive to buy, fly and maintain. They need takeoff space, which oftentimes means an airport, and they need expert pilots who are capable of flying them in such tight spaces. Flying near power lines is also very dangerous, both for the power line and for the people around it. Lastly, if you are only checking for damage and there is none, you have just spent all that time and money on something that was not a problem.

Based on this lab exercise, I would suggest using an Unmanned Aerial Vehicle for this scenario, most likely a multi-rotor, because multi-rotors can hover in place, are easily maneuverable, do not need much takeoff space and can be relatively cheap (compared to a helicopter).

This video, which is not in English, shows a prime example of how a UAV can be used to inspect power lines much more easily than a helicopter.

Sources

https://www.aibotix.com/en/inspection-of-power-lines.html

http://www.cbsnews.com/news/amazon-unveils-futuristic-plan-delivery-by-drone/


http://copter.ardupilot.com/

http://fctn.tv/blog/dji-phantom-review/

https://pix4d.com/

http://www.questuav.com/news/fixed-wing-versus-rotary-wing-for-uav-mapping-applications

http://www.realflight.com/index.html

Saturday, October 3, 2015

Lab 3: Conducting a Distance Azimuth Survey

Introduction

What happens if you need to conduct a survey with a fancy GPS and survey stations and the technology fails? Or maybe you are traveling to a different country and Customs seizes your equipment for inspection. It was not all that long ago that humanity did not have this kind of technology, and people seemed to survive just fine. To deal with this hypothetical problem, we used a tool that measures both the distance between you and an object and the azimuth to it.

Using a sophisticated Trupulse Laser Range Finder, we could point at any object we wanted, and the range finder would give us multiple values. The only ones we were interested in were slope distance (SD) and azimuth.

Study Areas

Figure 1: Study Area 1, done during class time (results: failed)

The second study area was on the northeast side of Phillips Science Hall, by the bus stop at the circular vehicle drop-off point (44.797638, -91.498991; Figures 2 and 3). We shot a total of 20 points from this location. Our features included trash cans, black street lights, wooden street lights and silver street lights.

Figure 2: Study Area 2, facing campus: we collected street lights and garbage cans from this location

Figure 3: Study Area 2, reverse direction: here we collected several different street pole types

The third study area was in the campus mall. We chose the large circle in the big open area of the mall because it is easy to identify on a map (44.79782, -91.500787; Figure 4). At this location we shot a total of 51 points, bringing our total to 71. We shot what was presumably every stone in the amphitheater and recorded each as a single, double or triple stone, depending on how many were grouped together.

Figure 4: Study Area 3, the campus amphitheater: here we collected data on all of the stone seats (single, double and triple)

Our final study area was about 20 feet from the northeast corner of Schofield Hall (44.798761, -91.499713; Figure 5). Here we shot our remaining 29 points, bringing our total to an astonishing 100 points, which took about an hour in all. At this location we shot a variety of objects, including benches, trash cans and assorted lamp posts (and came across one person who called us weird because they thought we were spying on people).

Figure 5: Study Area 4: here we captured several benches and light poles

Each of the study areas served a purpose. We wanted areas that were relatively open and away from buildings, so that when we put the points on a map they would be spread out, rather than having 15 points in a straight line all looking in one direction. For the features, we chose objects you can see on a map, so that we could check how precise our data was.

Methods

We collected all of our data points using a Trupulse Range Finder (Figure 6). The first step was to get this piece of equipment from the UWEC Geography Department. We then went to each of the three study areas listed in the Study Areas section above. We had a rough idea of what we wanted to shoot, but did not decide what else to shoot until we got to each location.

Figure 6: Trupulse Range Finder, the equipment used during data gathering

Once at a study area, we looked through the Trupulse Range Finder's scope. Inside the viewfinder is a square crosshair, which you line up with the object whose distance and azimuth you want. Once the object was in the crosshairs, we pressed a small button labeled FIRE on the top of the range finder, which sends out a laser pulse and gathers data on the object. Using the arrow buttons on the side of the range finder, we scrolled through the readings to find the slope distance and azimuth. One person operated the range finder and called out the distance and azimuth (as well as the object being shot) while the other recorded the data in a Google spreadsheet (Figure 7). We repeated this 99 more times.

Figure 7: Document containing all the information gathered in the field

The next step was to find the exact coordinates of each of our three starting locations. We did this in Google Maps by right-clicking on the exact spot and choosing “What is here”, which gives the coordinates (latitude and longitude, i.e. Y and X) to the 6th decimal place. We entered that information into our Google spreadsheet and then copied and pasted the entire sheet into Excel, so that we could work with the data in ESRI ArcMap.
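How fine is the 6th decimal place? A quick check, assuming a spherical Earth and the latitude of the Phillips Science Hall starting point; the helper name is hypothetical:

```python
import math

EARTH_RADIUS_M = 6_371_000  # spherical approximation


def decimal_place_resolution(places: int, latitude_deg: float) -> tuple[float, float]:
    """Ground size (m) of one unit in the last decimal place: (north-south, east-west)."""
    step_deg = 10 ** -places
    north_south = math.radians(step_deg) * EARTH_RADIUS_M
    east_west = north_south * math.cos(math.radians(latitude_deg))
    return north_south, east_west


ns, ew = decimal_place_resolution(6, 44.797638)
print(f"N-S: {ns * 100:.1f} cm, E-W: {ew * 100:.1f} cm")
```

At roughly 11 cm north-south and 8 cm east-west, rounding the coordinates is unlikely to matter much next to the effort of picking the exact spot by eye on the imagery.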

In a geodatabase already created in ArcMap, right-click, scroll down to Import and select Table (single). Our data was then imported into ArcMap and ready to use. The first tool we used was Bearing Distance To Line (Figures 8 and 9). This tool has a list of parameters, including the X, Y, azimuth and distance fields, which must be filled in properly for this step to work.
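What the Bearing Distance To Line tool does for each table row can be approximated by hand: start at the X/Y point and move the recorded distance along the azimuth. A minimal sketch, assuming a spherical Earth and a flat local approximation that is reasonable over the short distances shot here; ArcMap's own tool may use a different (for example geodesic) calculation, and the 30 m shot in the example is hypothetical:

```python
import math

EARTH_RADIUS_M = 6_371_000  # spherical approximation


def destination(lat_deg: float, lon_deg: float, distance_m: float, azimuth_deg: float):
    """Approximate end point of a line from a start point, distance and azimuth.

    Azimuth is measured clockwise from north: 0 = N, 90 = E, 180 = S, 270 = W.
    """
    az = math.radians(azimuth_deg)
    north_m = distance_m * math.cos(az)
    east_m = distance_m * math.sin(az)
    dlat = math.degrees(north_m / EARTH_RADIUS_M)
    # A metre east covers more longitude the farther north you are
    dlon = math.degrees(east_m / (EARTH_RADIUS_M * math.cos(math.radians(lat_deg))))
    return lat_deg + dlat, lon_deg + dlon


# Hypothetical shot: 30 m due east of the Schofield Hall starting point
print(destination(44.798761, -91.499713, 30, 90))
```

The tool repeats this for every row of the imported table at once and draws a line from the start point to each end point.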

Figure 8: The Bearing Distance To Line tool gives us the lines from the starting point to each feature point

Figure 9: End result of running the Bearing Distance To Line tool

The next step was to turn the line vertices into points, using the Feature Vertices To Points tool (Figures 10 and 11). This tool has only three options: Input Features (the line feature class we created in the previous step), Output Feature Class (where the result is saved) and Point Type. For Point Type, make sure to select END; otherwise you will end up with double the number of points, since options such as ALL take both the start and end points.
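Why END matters: every line from Bearing Distance To Line has exactly two vertices, a start at the observer and an end at the target. A toy sketch in plain Python (not ArcPy; the coordinates and the `vertices_to_points` helper are hypothetical) that mimics the END and ALL choices for such two-vertex lines:

```python
# Each line has two vertices: (start, end). Coordinates are hypothetical.
lines = [
    ((0.0, 0.0), (10.0, 5.0)),  # shot 1
    ((0.0, 0.0), (-3.0, 8.0)),  # shot 2
    ((0.0, 0.0), (6.0, -7.0)),  # shot 3
]


def vertices_to_points(lines, point_type="END"):
    """Mimic the END and ALL point types for two-vertex lines."""
    if point_type == "END":
        return [end for _start, end in lines]
    if point_type == "ALL":
        return [vertex for line in lines for vertex in line]
    raise ValueError(f"unsupported point type: {point_type}")


print(len(vertices_to_points(lines, "END")))  # one point per shot
print(len(vertices_to_points(lines, "ALL")))  # start and end: twice as many
```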

Figure 10: The Feature Vertices To Points tool gives the points at the ends of the lines

Figure 11: The end result of the Feature Vertices To Points tool, layered over the output of the previous tool

To show what each point represents, we had to join the points to the table from our Excel file. This is a simple join based on the Object ID. After running the join, I created a map showing the three study areas, with each type of feature the lines point to shown in a different color (figure 12).
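A join by Object ID simply matches each point to the table row with the same ID, so it only works when the points were created in the same order as the rows. A minimal sketch using plain Python dictionaries in place of the feature class and Excel table; all values are hypothetical:

```python
# Hypothetical end points keyed by ObjectID (as created with Point Type = END)
points = {1: (10.0, 5.0), 2: (-3.0, 8.0), 3: (6.0, -7.0)}

# Hypothetical rows from the Excel table, in the order the shots were taken
table = {1: "trash can", 2: "single stone", 3: "bench"}


def join_by_oid(points, table):
    """Attach each point's feature description; points with no matching row get None."""
    return {oid: (xy, table.get(oid)) for oid, xy in points.items()}


for oid, (xy, feature) in join_by_oid(points, table).items():
    print(oid, xy, feature)
```

Any extra points, such as the start vertices produced by the ALL option, have IDs with no matching row and come through without a description.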

Figure 12: Final product of feature points.

Step Recap

1. Gather Trupulse Range Finder

2. Locate Study Area

3. Use Trupulse Range Finder on desired objects

4. Record data in Spreadsheet

5. Record coordinates of starting locations

6. Import data into an Excel spreadsheet

7. Import data into ArcMap Geodatabase

8. Use Bearing Distance to Line tool in ArcMap

9. Use Feature Vertices to Point tool in ArcMap

10. Join the points feature class with the table by Object ID

11. Create visually pleasing maps

Discussion

On our map we had three different study areas, mentioned above. Two of the three proved to be pretty accurate, but the campus mall amphitheater area seemed to be off by several degrees. When we were testing the equipment earlier in the week, we found that underground wires and Wi-Fi signals could distort the sensor, and I believe that is the issue here. Looking at the map, all of the points from this area seem to be off by about 15-20 degrees and about 3 feet short of the target. The other two study areas went off without a hitch.
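To see why a few degrees matter more than a few feet, the distance between where a target really is and where it gets plotted can be worked out with the law of cosines. A minimal sketch, assuming a hypothetical 20 m shot (the actual shot distances are in our spreadsheet) with the 15-20 degree and roughly 3 ft errors described above:

```python
import math


def position_error(distance_m: float, azimuth_error_deg: float, range_error_m: float = 0.0) -> float:
    """Distance (m) between the true target and a point plotted with the given errors."""
    plotted_m = distance_m + range_error_m
    dtheta = math.radians(azimuth_error_deg)
    # Law of cosines on the triangle observer / true target / plotted point
    return math.sqrt(distance_m ** 2 + plotted_m ** 2
                     - 2 * distance_m * plotted_m * math.cos(dtheta))


# Hypothetical 20 m shot that is 15-20 degrees off and about 3 ft (0.9144 m) short
for err in (15, 20):
    print(err, round(position_error(20, err, -0.9144), 1))
```

At 20 m, the angular error alone moves the point roughly 5 m, far more than the 3 ft range error, and the displacement grows with distance.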

To avoid bias, the same person captured all of the data through the range finder, and the other person did all of the typing.

Unfortunately, the most recent imagery we used shows Centennial Hall still under construction. This led to several points looking like they landed on construction equipment rather than the light posts or tables that are actually there today.

Overall, we spent about an hour collecting data points in the field. It was around 8 am when we started, so some points were hard to see, especially those east of us: looking through a range finder when the sun is directly in line with your object is not fun, and it is incredibly dangerous if done for too long.

Frequently Asked Questions

Q: What am I looking for while scrolling through the range finder?

A: There are two fields we are looking for. The first is labeled SD, meaning slope distance: the straight-line distance to the object, which takes into account both the vertical and horizontal distance. The second is labeled AZ, the azimuth, or how many degrees (0-360) the object is from True North: 0/360 is north, 90 is east, 180 is south and 270 is west.
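Because slope distance includes the vertical component, it is slightly longer than the horizontal distance a flat map needs. A minimal sketch of the split, assuming an inclination reading (the angle above or below horizontal) is recorded alongside SD, which we did not do in this lab; the reading in the example is hypothetical:

```python
import math


def horizontal_and_vertical(slope_distance_m: float, inclination_deg: float) -> tuple[float, float]:
    """Split a slope distance into (horizontal, vertical) components."""
    inc = math.radians(inclination_deg)
    return slope_distance_m * math.cos(inc), slope_distance_m * math.sin(inc)


hd, vd = horizontal_and_vertical(25.0, 5.0)  # hypothetical reading: SD 25 m, 5 degrees up
print(f"HD = {hd:.2f} m, VD = {vd:.2f} m")
```

On fairly flat ground like the campus mall the difference is small, which is why slope distance worked well enough here.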

Q: What happens if I use the ALL point type in the Feature Vertices To Points tool?

A: It will double the number of points you have. Instead of creating only the end points, which is what we wanted in this lab, it creates both the start and end points, turning 100 features into 200. This then throws off the table join, leaving half of your points without a feature description.

Q: What if there is no current base map with up-to-date UWEC imagery?

A: To work around this issue, I used two images from the Geospatial folder on the UWEC Geography Department's servers. Although they are not completely up to date, they still gave me what I needed, which the other base layer data could not provide unless I was zoomed far out.

Results


The image above shows the final product for all of the study areas. In total we tagged 6 benches (red), 19 black street lights (orange), 2 cigarette receptacles (light orange), 9 double stones (yellow), 8 silver street lights (green), 34 single stones (blue), 11 trash cans (purple), 8 triple stones (pink) and 3 wooden street lights (grey).

Although we were careful with the data collection, there was still a little skew in our data. Again, this could be attributed to just about anything: Wi-Fi, underground cables, or the haze and fog during our data capture. There are many possibilities that could skew the laser, and human error is also a possibility.

Conclusion

Overall, this is a pretty low-tech way of mapping features. We were, of course, using a very expensive tool, but the same survey could be done with a measuring tape and a compass. That would have taken us a lot longer than the one hour we spent outside collecting data, though.

This could come in handy if GPS technology is unavailable or if something happens to your equipment that makes it unusable. In a world of ever-growing technology, though, this tool (the range/azimuth finder) has been replaced by far more sophisticated technologies that can largely take human error out of the process.
